Generative AI is a transformative force currently reshaping the landscape of K–12 education. For district leaders, the stewards of a rapidly changing system, this moment calls for more than curiosity; it requires a clear-eyed, strategic response. The rapid acceleration of AI and its reach into every aspect of teaching, learning, and leadership demand deliberate leadership. Leaders must commit to vision-setting, ethical modeling, and system-wide learning.

The question is no longer if AI will impact education, but how we will shape its role in service of students, equity, and learning. As guardians of long-term vision and student outcomes, district administrators must lead the conversation around how AI fits into their community’s values, organizational systems, and educational goals. They must lead intentionally, ethically, and with collective purpose. To effectively prepare teachers and students for the future, our K–12 leaders must demonstrate vision, guide effective change management, prioritize upskilling, and model responsible AI usage.

Leading with Vision

Educational leaders should first develop a clear vision explaining the importance and relevance of generative AI tools or policies within their district context. The vision should be rooted in the overarching mission and vision of the school district rather than in current trends around particular AI tools. Focusing solely on AI tools can lead to misaligned and ineffective educational initiatives, similar to what has happened with other new technologies and digital tools over several decades. Prioritizing the district’s mission and vision ensures generative AI strategically supports broader educational goals, ultimately benefiting students.

District leaders should be individually and collectively discussing the following:

- What is the district’s “why” for exploring generative AI?

- How does AI relate to our core values as a community?

- How can AI improve learning outcomes, increase access, and/or close opportunity gaps for students?

- What does responsible innovation look like in our community?

- How can we bring all voices to the table—teachers, students, families, and community partners—to co-create that vision?

Embracing Change Management

Implementing generative AI isn’t a technology project—it is the responsibility of the school community. It impacts curriculum, operations, assessment, communication, and data governance. And while it can be easier to relegate AI to a technology department, it will never be adopted in purposeful, safe, and responsible ways without the engagement of the whole system. That’s why change management is essential.

District and school leaders must prioritize the following actions as part of a change management process:

- Create a shared sense of urgency without resorting to fear. What is your “compelling why” for integrating generative AI in your district and/or school?

- Build a guiding coalition that includes instructional and technical voices. As you do, consider whose voices are missing and be intentional about including them.

- Plan for iterative rollout, not a single “launch moment.” Many districts are staging how generative AI is implemented, often beginning with leadership, then staff, and finally students.

- Address resistance with empathy, transparency, and support. People fear what they do not know. The more information you can provide the school community, the better. As Brené Brown says, “Clear is kind.”

Throughout an intentional AI adoption process, district leaders should continue to individually and collectively reflect and discuss the following:

- Who’s pushing back, and why? Is it fear, lack of training, security worries, or just not seeing the point? How can we address these specific concerns?

- What are people worried about – job loss, ethical issues, how it works, or its accuracy in education? How can we build trust and explain things better to ease their concerns?

- Who’s excited about new technology, especially generative AI? What do they do and how can we get them involved? How can we create spaces for them to share their positive experiences and get others on board? How can we recognize and celebrate these early adopters?

Frameworks like Kotter’s 8-Step Change Model or the Knoster Model for Managing Complex Change can help leaders structure the rollout in a way that reduces friction and builds trust.

Elevating Digital and AI Literacy Across the System

One of the greatest risks in AI adoption isn’t the technology itself—it’s the lack of preparedness among staff and students to use it critically and ethically.

A primary danger lies not in the AI tools themselves, but in the potential for their misuse or acceptance without critical evaluation. In any technology adoption or implementation, significant danger lies in insufficient preparation. Unfortunately, many districts and schools continue to view digital literacy as supplementary rather than foundational to a student’s education. This stems from the long-standing assumption that students are “digital natives.”

In 2001, Marc Prensky coined the terms “digital natives” and “digital immigrants.” Digital natives are people who have grown up with access to technology and have a natural understanding of it, whereas digital immigrants were born before most technology was developed and generally face challenges when trying to adapt to digital environments. Why is this problematic? The term “digital natives” has come to imply that students come out of the womb knowing how to use technology, which allows K–12 educators to abdicate their responsibility for teaching appropriate and responsible use.

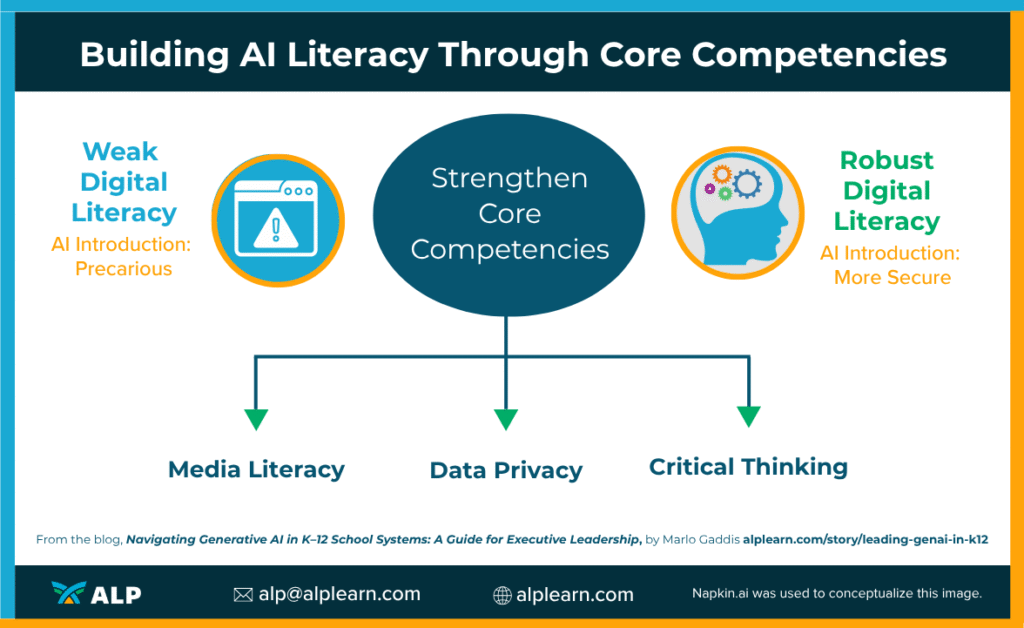

This marginalization of digital literacy has created a precarious environment for the introduction of AI. If core competencies such as media literacy (the ability to analyze and evaluate information from various sources), data privacy (understanding and managing personal information in a digital world), and critical thinking (the capacity for reasoned judgment) are not already well-established within a school community, the development of robust AI literacy will be severely hampered.

AI literacy, which encompasses understanding AI’s capabilities and limitations, recognizing its ethical implications, and using it effectively and responsibly, cannot thrive on a weak foundation of general digital competency. It requires a prior understanding of how information is created, disseminated, and consumed in digital spaces, as well as a well-developed sense of ethical conduct in technological interactions. Without a strong groundwork in these areas, the potential benefits of AI in education risk being overshadowed by its misuse, misunderstanding, or even the exacerbation of existing digital inequalities.

District leaders need to reflect individually and discuss collectively the following:

- What assumptions are we making about students’ digital skills? How do those assumptions show up in our policies or instructional practices?

- How well are our students currently taught to evaluate information, recognize bias, and question sources online? Who is doing this work?

- What structures do we have in place to teach and reinforce digital privacy, data security, and ethical online behavior? How are we ensuring these lessons are developmentally appropriate and consistently reinforced?

Consider adopting or aligning to frameworks such as:

- ISTE

  - The ISTE Standards (for students, educators, education leaders, and coaches)

  - Computational Thinking Competencies

- AI4K12’s Five Big Ideas in AI

- Digital Promise AI Literacy

- UNESCO’s AI Competency Frameworks (for students and teachers)

This work must be scaffolded, equitable, and ongoing—not a one-and-done PD. Educators need time, space, and support to explore how AI tools affect teaching, learning, and operations. To truly prepare schools for an AI-infused future, we must invest in a long-term strategy that includes differentiated supports, collaborative learning environments, and time for experimentation. Equity means ensuring that all staff—regardless of role or background—can engage meaningfully in this work. AI literacy is not a destination—it’s a continuous journey of growth, reflection, and adaptation.

ALP has partnered with several districts across North America to design AI competencies for teachers and students aligned to a national or international framework as well as the districts’ prioritized learning model.

Modeling Ethical, Transparent, and Thoughtful Use

Leaders can’t ask their teams to explore AI if they’re not using it themselves—or worse, using it in ways that undermine trust.

Effective and ethical modeling of AI tools and platforms looks like:

- Using generative AI for real work (e.g., drafting memos, brainstorming policy language) and naming when you do. Here’s an example: County of Sonoma Policy 9-6, Information Technology Artificial Intelligence (AI) Policy. The use of AI is listed in the acknowledgements.

- Practicing transparency: disclosing when content is AI-generated, fact-checking results, and citing sources. Note my own AI citation at the end of this piece.

- Setting boundaries: defining what AI should not be used for (e.g., finalizing student evaluations or writing IEPs). The Georgia Professional Standards Commission recently published its Ethical Considerations in the Appropriate Use of AI for Educators, a strong example of communicating boundaries and definitions clearly.

- Surfacing ethical dilemmas and inviting discussion, rather than making AI use invisible. In the professional learning sessions I lead, I often share a set of ethical dilemmas and have the group discuss possible solutions. Facing History & Ourselves provides a sample lesson for middle and high school students around ethics.

District leaders should reflect on the following:

- In what ways have I used generative AI tools in my own work?

- Have I shared those examples transparently with my team?

- How am I investing in my own learning about generative AI in order to lead?

Modeling is more powerful than any PD session. It sets the tone for culture and expectations. When leaders and educators actively demonstrate thoughtful, responsible use of AI, they create a ripple effect across the school community. Modeling builds trust, normalizes experimentation, and reinforces shared values in real time. It moves AI literacy from theory to practice—showing, not just telling, what intentional and ethical implementation looks like. This kind of leadership is essential to fostering a culture where continuous learning and innovation are both expected and supported.

From Experimentation to Empowerment

The generative AI journey in K–12 is not about chasing the latest tools—it’s about cultivating the leadership mindset and organizational readiness to leverage innovation responsibly.

Leaders don’t need all the answers. But they do need to:

- Ask the right questions

- Center the needs of students and staff

- Build a culture of curiosity, vulnerability, care, and co-creation

As we move forward, let’s shift from fear of the unknown to purposeful implementation—because the goal isn’t just AI adoption. It’s an educational transformation. Now is the time for staff to build confidence and capacity, for students to be empowered as ethical and creative problem-solvers, and for leaders to boldly shape the conditions that make innovation possible. This isn’t just about using new tools—it’s about rethinking what’s possible in our classrooms, our systems, and our leadership. The path forward requires thoughtful action, shared responsibility, and a clear focus on what truly benefits teaching and learning.

Want to prepare your district for AI adoption? Discover how ALP’s AI professional learning services help K-12 districts develop AI policies and leadership frameworks.

Written by Marlo Gaddis, ALP Featured Consultant | Follow Marlo on LinkedIn.

Marlo Gaddis, CETL, CCRE is a nationally recognized leader in educational technology, helping education leaders navigate the rapidly evolving digital landscape with purpose, clarity, and equity. In her work with StrategicEdu, a close partner of ALP, she supports district and state executive leaders in the strategic adoption of system-level AI solutions. Marlo serves on several advisory boards, including the Board of Directors for the Consortium for School Networking (CoSN), where she helps shape the future of technology in education.

Author’s statement on AI use:

To support the development of this blog post, I collaborated with ChatGPT by OpenAI and Gemini by Google. I used them to help organize and refine my ideas, particularly around structuring the outline, clarifying complex points, and generating reflection points for leadership. The content reflects my voice, experience, and perspective as an educational leader, with AI serving as a creative and editorial partner in the writing process.

References:

Brown, B. (2024, February 22). Clear is kind. Unclear is unkind. Brené Brown. https://brenebrown.com/articles/2018/10/15/clear-is-kind-unclear-is-unkind/. Accessed 19 May 2025.

Prensky, M., & Heppell, S. (2010). Teaching digital natives: Partnering for real learning. Corwin, a Sage Company.